Weekend Links #5: Trump Fights Zelenskyy, Meta builds AI Glasses

Also Claude plays Pokémon and Microsoft is skeptical of AI hype

A good forecaster reads constantly. However, like the power laws this blog is named for, some readings matter far more than others. Each weekend, I'll share the standouts …along with some occasional fascinating rabbit holes. Some weekends will be light, others heavy (that's how power laws work), but each link will be worth your weekend morning coffee time.

Geopolitics

…Reacting to a heated Trump-Zelenskyy exchange

It’s quite annoying as a blogger to write a timely article and then have it upended by events just a few hours later. Friday morning, I wrote “Ukraine's Endgame: Four Scenarios for 2025” about Ukraine's prospects, discussing an upcoming meeting with Trump. The meeting began cordially but deteriorated when Vice President Vance deliberately put Zelenskyy on the spot, demanding gratitude. Zelenskyy tried to explain that a simple ceasefire wouldn't work because Putin has violated many past ceasefires and Ukraine needs more concrete guarantees. In response, Trump became visibly irritated, culminating in him pointing at Zelenskyy and accusing him of “gambling with World War III.”

This exchange has prompted several people to ask me how this affects my predictions about Ukraine. The answer, in my opinion, is that it is premature to fixate too much on any particular event in the day-by-day news cycle dominated by President Trump. It’s a common “recency bias”-style forecasting error to overreact to news. This is especially true with Trump, who is known to keep his options open and change his opinions easily — keep in mind that just a week ago Trump was calling Zelenskyy a dictator and repeating pro-Kremlin talking points before walking back those statements and meeting with Zelenskyy in the Oval Office. This heated exchange represents a reversion to Trump's stance from the prior week. It seems entirely plausible that within another week, Trump's reactions to Zelenskyy could again shift dramatically.

Let’s look more to the fundamentals:

Trump wants to be seen as an independent arbiter. In the exchange, Trump positions himself as a deal-maker. When directly asked if he was on Ukraine's side, Trump replied, “I'm in the middle. I want to solve this thing. I'm for both.” This aligns with his statement that he hopes to be “remembered as a peacemaker.” While his positions on specific issues shift, this mediator role remains central to his approach.

Trump wants to peel Russia off from China. This is part of the “Russian Reset” I mentioned in my prior post. For Trump, this likely involves trying to get on Putin’s good side by saying nice things about Putin and helping Putin denigrate Zelenskyy.

Trump wants the war to end soon. This could be part of either a pivot to China or a turn toward isolationism. Either way, Trump doesn’t have the appetite for sustained US support of the conflict in Ukraine.

Trump wants Europe to handle more of Ukraine’s defense. This is the continuation of a long US push, but Trump is doing this in a significantly more confrontational, “bad cop” way.

Trump has to be really careful to not be seen as “losing the war”. While Trump may be able to spin a Ukraine loss as Ukraine's fault for not making a deal, he would likely face negative public perception if Ukraine falls more significantly to Russia. Just look to how the public viewed Biden after the botched withdrawal from Afghanistan.

Where my view has changed most is on security guarantees, which could be a bigger disagreement between Trump and Zelenskyy than I first thought. Trump dismissed their importance, calling them “2% of the problem”, when in reality they are fundamental to properly resolving the conflict. However, I think Trump is mainly against US peacekeepers securing a ceasefire but is open to European peacekeepers, and European peacekeepers and European security guarantees could still be a basis for a workable ceasefire.

Based on these fundamentals, I think it’s likely that the mineral deal will be picked back up again and the path to peace may continue. I also hope that Europe will react to this situation by planning to allocate more spending and manufacturing to Ukraine defense. Lastly, my predictions already had a substantial chance that no ceasefire can be reached within the year. Thus I am still keeping my predictions the same, despite this latest setback.

AI

…Inside Meta’s AI push

I see five key companies with the talent, GPUs, and annual investment needed to make major progress on AI: OpenAI, Anthropic, Google DeepMind, xAI, and Meta, with an outside possibility that Mistral or DeepSeek could join that list. I think Grok 3 showed I was right to be bullish on xAI. The main company that hasn’t yet backed up my assessment is Meta, whose products have been seriously lacking in quality.

Zuckerberg wants to change that. In his earnings call in January, Zuckerberg said:

I expect this is going to be the year when a highly intelligent and personalized AI assistant reaches more than 1 billion people, and I expect Meta AI to be that leading AI assistant.

Meta AI is already used by more people than any other assistant, and once a service reaches that kind of scale it usually develops a durable long-term advantage. We have a really exciting roadmap for this year with a unique vision focused on personalization.

~

…xAI can search Tweets, Google DeepMind can power Google Suite, and OpenAI can power Microsoft products. What is “Meta’s killer feature” going to be? Potentially personalization:

We believe that people don't all want to use the same AI — people want their AI to be personalized to their context, their interests, their personality, their culture, and how they think about the world.

…Also a continued investment in open source:

I think this will very well be the year when Llama and open source become the most advanced and widely used AI models as well. […] Our goal with Llama 3 was to make open source competitive with closed models, and our goal for Llama 4 is to lead.

~

Training Llama 4, Meta’s next generation model, is in progress:

Llama 4 is making great progress in training. Llama 4 mini is done with pre-training and our reasoning models and larger model are looking good too. […] Llama 4 will be natively multimodal -- it's an omni-model -- and it will have agentic capabilities, so it's going to be novel and it's going to unlock a lot of new use cases. I'm looking forward to sharing more of our plan for the year on that over the next couple of months.

Looks like Llama 4 will come with a lot of the bells and whistles we’ve expected, including multimodal reasoning (e.g., images and text) and deeper reasoning. However, I’m curious what those “agentic capabilities” and “new use cases” might be. Perhaps they might try to move Llama 4 in the way of OpenAI’s Operator, being able to take actions on the internet and help you buy things and do internet tasks?

~

…Meta is also investing heavily in building more data centers to power this:

These are all big investments -- especially the hundreds of billions of dollars that we will invest in AI infrastructure over the long term. I announced last week that we expect to bring online almost 1GW of capacity this year, and we're building a 2GW and potentially bigger AI datacenter that is so big that it'll cover a significant part of Manhattan if it were placed there.

Separately, The Information reports Meta is planning a data center buildout that would rival OpenAI’s Stargate.

~

Meta is also looking to follow in the footsteps of OpenAI and Anthropic and really dig into coding:

I also expect that 2025 will be the year when it becomes possible to build an AI engineering agent that has coding and problem-solving abilities of around a good mid-level engineer. This is going to be a profound milestone and potentially one of the most important innovations in history, as well as over time, potentially a very large market. Whichever company builds this first I think is going to have a meaningful advantage in deploying it to advance their AI research and shape the field. So that's another reason why I think that this year is going to set the course for the future.

~

And it wouldn’t be Zuckerberg without a focus on AI glasses:

Our Ray-Ban Meta AI glasses are a real hit, and this will be the year when we understand the trajectory for AI glasses as a category. […]

I've said for a while that I think that glasses are the ideal form factor for an AI device because you can let an AI assistant on your glasses see what you see and hear what you hear, which gives it the context to be able to understand everything that's going on in your life that you would want to talk to it about and get context on. […]

It's kind of hard for me to imagine that a decade or more from now all the glasses aren't going to basically be AI glasses as well as a lot of people who don't wear glasses today, finding that to be a useful thing.

~

…And maybe a Meta AI app?

While not in the earnings call, CNBC has reported that Meta may release a standalone Meta AI app in Q2 2025 in an effort to compete with OpenAI's ChatGPT. Meta also intends to test a paid subscription service for Meta AI, following OpenAI and Microsoft's model of charging for access to more powerful versions of their AI tools.

~

…Explaining the OpenAI - Microsoft breakup

Nearly a year ago, it was reported that Microsoft and OpenAI were jointly planning a $100B data center referred to as “Stargate”. Nearly one year later, Sam Altman announced Stargate publicly alongside Trump, this time with much of the financial backing coming from Oracle and SoftBank. This raised an important mystery: where did Microsoft go?

It looks like the answer is that Microsoft got cold feet and pulled out. According to New York Times reporting, part of the problem was that Microsoft CEO Satya Nadella was spooked by Altman’s attempted firing by the OpenAI board in November 2023.

It also seems like Microsoft thought that the potential monetary return from building hundred billion dollar data centers for OpenAI was suspect. The Information reports:

[A]t Microsoft, executives were studying whether building large data centers for OpenAI would even pay off in the long run[. …] Microsoft’s cloud computing chief Scott Guthrie, for instance, instructed deputies to forecast future demand for such facilities from other AI customers who might use them after OpenAI did, to ensure they wouldn’t sit idle, one of these people said.

[...] The software giant permitted the Abilene deal because CEO Satya Nadella viewed it as a one-off. But Altman quickly determined that OpenAI would need even more computing clusters to develop AI that exceeded human performance. [...] The companies then wrangled over various aspects of their partnership, including Microsoft’s status as OpenAI’s exclusive cloud provider. At the end of December, they amended the deal to remove the cloud-related clause, according to someone who spoke to Nadella, and Altman took his Stargate dream elsewhere [to Oracle and SoftBank]. […] Nadella also justified the change to the deal as a way for Microsoft to continue to benefit from new models that OpenAI has trained without having to shoulder the cost of building massive AI training clusters.

[…] Ellison, who has been friendly with Trump for decades and is also close to Musk, now has an incentive to protect OpenAI. Oracle stock has risen 16% since the announcement, a nice lift for a company that was at risk of falling behind in the AI race due to its limited resources compared to cloud providers like Microsoft.

~

This OpenAI shift from Microsoft to SoftBank is real. The Information reports that OpenAI is strategically shifting its computing power alliance from Microsoft to SoftBank:

OpenAI projects that SoftBank's Stargate data center project will support 75% of its computing needs by 2030.

SoftBank is expected to provide at least $30 billion of the $40 billion OpenAI is raising, valuing the company at $260 billion.

And one-third of 2025's revenue growth will come from SoftBank using OpenAI products across its companies.

~

But why is Microsoft shifting away from OpenAI? It seems like Nadella is just not a true believer in the massive AI transformation and money machine that Altman is pitching. Look to the interview between Nadella and Dwarkesh Patel. Unlike Sam Altman, Dario Amodei, or even the relatively restrained Demis Hassabis, Nadella has pretty tame views of how much AI might transform the future, seems to describe AI as overhyped, and notes that he is limiting his own investment, preferring to lease capacity from those who are overinvesting rather than buy things he has to pay for permanently. From the interview, Nadella states:

[T]here will be overbuild. To your point about what happened in the dotcom era, the memo has gone out that, hey, you know, you need more energy, and you need more compute. Thank God for it. So, everybody's going to race. […] In fact, it's not just companies deploying, countries are going to deploy capital, and there will be clearly... I'm so excited to be a leaser, because, by the way; I build a lot, I lease a lot. I am thrilled that I'm going to be leasing a lot of capacity in '27, '28 because I look at the builds, and I'm saying, "This is fantastic." The only thing that's going to happen with all the compute builds is the prices are going to come down.

~

And now there’s reporting that apparently Microsoft is paying a premium to get out of data center related buildouts:

Microsoft Corp. has canceled some leases for US data center capacity, according to TD Cowen, raising broader concerns over whether it’s securing more AI computing capacity than it needs in the long term. OpenAI’s biggest backer has voided leases in the US totaling “a couple of hundred megawatts” of capacity […]

In Friday’s report, TD Cowen’s analysts wrote that their channel checks had unearthed a number of signs that Microsoft is gradually retreating. They learned that Microsoft had let more than a gigawatt of agreements on larger sites expire and walked away from “multiple” deals involving about 100 megawatts each. […]

TD Cowen said Microsoft used facility and power delays as justification for the termination of leases. That was a tactic rivals such as Meta previously employed when curbing capital spending […]

Exactly why Microsoft may be pulling some leases is unclear. TD Cowen posited in a second report on Monday that OpenAI is shifting workloads from Microsoft to Oracle Corp. as part of a relatively new partnership.

The Information reports that Microsoft is still continuing its $80 billion annual spend on data centers, though Microsoft claims “there was less demand for generative artificial intelligence than Microsoft initially forecasted” and that Microsoft was worried about “building too many facilities”.

This is a different vibe from Amazon's Andy Jassy, who describes their demand as completely “insatiable”, and from Sam Altman, who says OpenAI faces GPU shortages and is trying to buy hundreds of thousands more chips.

Time will tell whether this was one of the most epic misses by Microsoft to not be front-and-center in the AI boom or whether they were correctly and strategically hedging their risks.

~

…New Anthropic Model, new Amodei podcast

This week, Anthropic released their newest model, Claude 3.7 Sonnet — combining extended thinking with simple responses in one model, as I mentioned in my last weekend links.

It’s a good model that is my main frontline model for most use cases, especially code and writing, though I also like o1 pro and OpenAI Deep Research for heavier tasks and o3-mini-high or Grok 3 for when search is important (Grok 3 is especially good for being able to search tweets).

To discuss it, Dario Amodei joined the Hard Fork podcast. Some of my favorite takeaways:

“3.7 Sonnet” is a major update, but not a brand new large-base model. 3.7 Sonnet is smaller in size than Grok 3 and GPT 4.5. Amodei hints that a truly large-scale “Claude 4” is coming soon, likely using the new clusters from Amazon.

Currently you have to manually tell Claude 3.7 whether to use extended thinking or not. According to Amodei, the future goal is for the model itself to gauge how long it needs to think.

Why can’t Claude search the web like every other model? Amodei states this is “coming very soon” and the main reason is that Claude focuses most on Enterprise use cases where search isn’t a priority.

Amodei is 70-80% confident we’ll see extremely powerful AI by the end of 2027. Similarly, the Claude launch predicts that 2027-era Claude would be able to quickly find breakthrough solutions to challenging problems that would have taken human teams years to achieve. If this is true, that’s wild! That’s less than three years away!

Amodei sees no “immediate lethal dangers” in Claude 3.7 but is worried about future models, saying these could facilitate new types of bioweapons or other large-scale harms. I discuss this sort of thing in “AI security is important practice for when stakes go up”.

Amodei sees a 10–25% chance of negative “civilizational derailment” by AI. He sees risk rising in step with progress, but he’s also encouraged by some recent research into technical safety mitigations that may lower the risk.

Amodei wants a middle path on the doomer vs. acceleration camp: address real risks (like potential doomsday applications) without stifling beneficial uses.

What does Amodei think you should do about rapid AI progress? Do the things you would do anyway — stay healthy, stay informed, keep track of rapid changes. Also focus on critical thinking because soon you’ll be surrounded by AI content — some from helpful AI and some from malicious AI.

Amodei is disappointed by the Paris Summit, finding it to be more “like a trade show” with little real discussion of the serious AI risks that the prior summits focused on.

Ironically GitHub seems to use Claude for (at least some) real-life coding workflows, despite being owned by Microsoft.

~

…Claude plays Pokémon

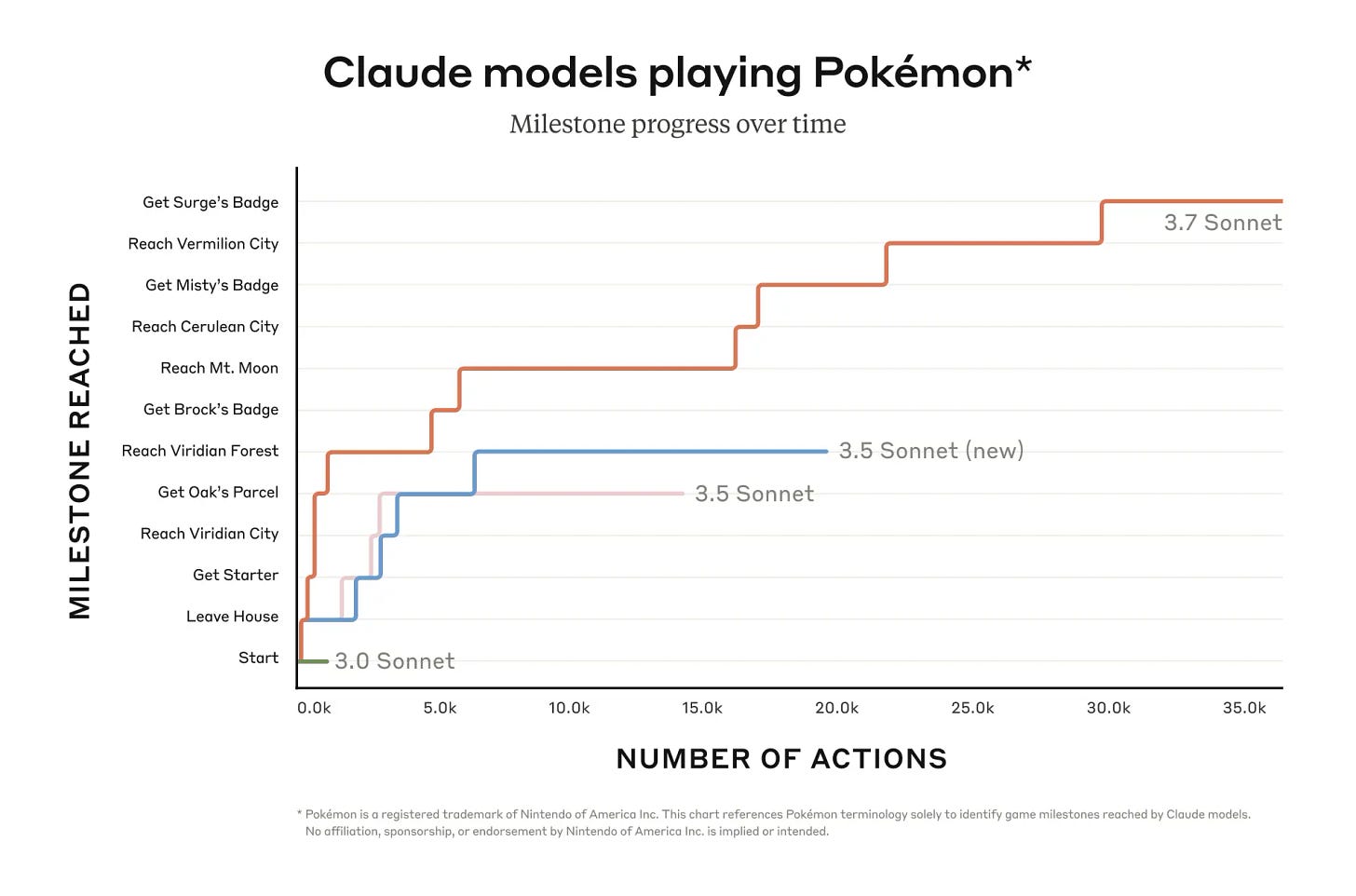

Thanks to Claude’s extended thinking, it can finally progress further in the most important evaluation of them all: beating the Elite Four in Pokémon Red.

Without extended thinking, it looks like “Claude 3.5 Sonnet (new)” got stuck in the Viridian Forest. By contrast, 20,000 button clicks (about three days of continuous gameplay) were enough for Claude 3.7 to get the first badge and get through Mt. Moon.

The way this system works is that Claude reasons about the current goals and game state and then takes one action at a time. Claude can write key information to a knowledge base and later retrieve it to inform its strategy. It also gets some visual assistance from a tool that helps it understand which parts of the map are navigable.
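To make that loop concrete, here is a minimal Python sketch of a "reason, act once, write to memory" agent. This is not Anthropic's actual harness: the one-dimensional toy world, the `KnowledgeBase` class, and the `choose_action`, `apply_action`, and `run_agent` functions are all hypothetical names I made up for illustration, and `choose_action` is a hard-coded stand-in for where the model's reasoning would go.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class KnowledgeBase:
    """Toy key-value memory the agent can write to and read from."""
    notes: dict[str, str] = field(default_factory=dict)

    def write(self, key: str, value: str) -> None:
        self.notes[key] = value

    def read(self, key: str, default: str = "") -> str:
        return self.notes.get(key, default)


def choose_action(state: dict, kb: KnowledgeBase) -> str:
    """Hypothetical stand-in for the model's reasoning step.

    It looks at the goal, the current position, and which tiles the
    (assumed) navigation helper marks as walkable, then picks one button.
    """
    x, goal = state["x"], state["goal_x"]
    if x < goal and (x + 1) in state["navigable"]:
        return "RIGHT"
    if x > goal and (x - 1) in state["navigable"]:
        return "LEFT"
    return "A"  # nothing useful to do, press a harmless button


def apply_action(state: dict, action: str) -> dict:
    """Toy game engine: move along a one-dimensional map."""
    new_state = dict(state)
    if action == "RIGHT":
        new_state["x"] += 1
    elif action == "LEFT":
        new_state["x"] -= 1
    return new_state


def run_agent(state: dict, max_steps: int = 20) -> tuple[dict, KnowledgeBase, list[str]]:
    """Take one action at a time, recording notes after each step."""
    kb = KnowledgeBase()
    history: list[str] = []
    for _ in range(max_steps):
        if state["x"] == state["goal_x"]:
            kb.write("last_goal_reached", f"x={state['x']}")
            break
        action = choose_action(state, kb)
        history.append(action)
        state = apply_action(state, action)
        kb.write("last_position", f"x={state['x']}")
    return state, kb, history


if __name__ == "__main__":
    start = {"x": 0, "goal_x": 3, "navigable": {0, 1, 2, 3}}
    final_state, kb, history = run_agent(start)
    print(history, kb.notes)
```

The point of the sketch is the shape of the loop: one action per step, with memory living outside the model so it survives between steps. A real setup would replace `choose_action` with a model call and the toy map with emulator state.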

Claude is streaming on Twitch now, where as of the time of writing Claude had just defeated Misty to get its second gym badge. Per the earlier testing, it seems like Claude 3.7 can get the first three badges and then gets seriously stuck somewhere. If Mt. Moon is any indication, I’m guessing Claude 3.7 won’t be able to get through Rock Tunnel. Looks like we will see what happens on the stream in another four days or so.

What makes this interesting is that there’s a long history of AI doing well at games, but typically this involves a lot of training on the exact game itself, whereas Claude has never trained on Pokémon explicitly. What also makes this interesting is that this is a game that Claude is not doing well on, with eight-year-old children being able to beat Claude on this task easily.

And Claude does have a lot of Pokémon-related facts in its training data and has quite a good sense of what needs to be done, whereas human children can beat this game with minimal prior information. I'd be pretty curious to see it play a video game that it has zero information about in its training data and that involves more novel mechanics.

~

…Claude powers Alexa

Alexa+ is coming soon, powered by Claude. It will be really interesting to see how this works in practice and at scale. It will cost $20 a month, but is free with Prime. I’ve always been surprised by how bad voice assistants have been despite AI advancing dramatically and I’ll be curious to see if Anthropic can do any better.

~

…Elon Musk and Joe Rogan on AI risk

Elon Musk went on the Joe Rogan podcast and discussed Grok, Fort Knox, DOGE, Soros, Epstein's Client List, SpaceX, and much more. However, given Musk’s prior track record on the issue and position of great political and economic influence, I was particularly interested in his comments on AI. Here are some interesting tidbits:

Musk thinks that AI will be smarter than any human next year and smarter than all humans combined by 2030. I imagine he’s off on at least the first prediction and he does have a past track record of estimating that tech achievements happen earlier than they do.

Musk sees AI as “either super awesome or super bad. It’s not going to be something in the middle.” He thinks it’s 80% likely to be in the “super awesome” category, with a 20% chance of it being “super bad”.

According to Musk, super oppressive AI is one of the risks: “One of the concerns would be, like, okay, if there’s a super oppressive, ‘woke nanny AI’ that is omnipotent, that would be a miserable outcome. […] If you program an AI and say, like, the only acceptable outcome is a diverse outcome […] AI could just execute a bunch of people to achieve that outcome.”

Musk doesn’t say anything about what, if anything, should be done about these risks.

~

…Meanwhile in China

We are seeing more signs of AI investment in China. Alibaba said Monday it will spend more than $53 billion on projects like data centers over the next three years, the most ever spent by a private Chinese company on AI hardware. That’s about $17.7B a year, which is much less than what American companies spend, though the gap may be narrower than it looks because a dollar buys more in China.
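For anyone who wants to play with the arithmetic, here is a small Python sketch. The $53 billion and three-year figures come from the Alibaba announcement above. The `cost_multiplier` values are purely hypothetical placeholders for "a dollar buys more in China", not estimates I am endorsing, and the function names are my own.

```python
def annualized_spend(total_billions: float, years: int) -> float:
    """Spread a multi-year commitment evenly across its years."""
    return total_billions / years


def effective_spend(annual_billions: float, cost_multiplier: float) -> float:
    """Scale nominal spend by an assumed purchasing-power multiplier."""
    return annual_billions * cost_multiplier


if __name__ == "__main__":
    annual = annualized_spend(53.0, 3)
    print(f"Nominal: ${annual:.1f}B per year")
    # Hypothetical multipliers only; swap in your own assumption.
    for multiplier in (1.0, 1.25, 1.5):
        adjusted = effective_spend(annual, multiplier)
        print(f"  x{multiplier}: ${adjusted:.1f}B per year in US-equivalent terms")
```

The nominal figure works out to about $17.7B a year. Everything after that depends entirely on which multiplier you believe.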

More interestingly, Alibaba CEO Eddie Wu has stated that his goal is to build AGI, or AI that can replace all human labor: “Our first and foremost goal is to pursue AGI … We aim to continue to develop models that extend the boundaries of intelligence.”

DeepSeek CEO Liang Wenfeng is also focused on building AGI and thinks this will be accomplished within 2-10 years. US companies like OpenAI and Anthropic aren’t the only companies with lofty AI goals.

~

Get hired to do cool AI stuff

METR is an independent non-profit doing evaluations to learn about the state of AI progress. They are currently running a pilot field experiment to measure how AI tools affect open source developer productivity. If you're an open source developer who wants to make $150/hour to work on issues of your own choosing, consider expressing interest.

You must:

Have at least 1 year of professional experience as a software engineer

Have at least 6 months of experience as an active maintainer of the repository

~

Whimsy

…Death by asteroid canceled

For a brief window it looked like Asteroid 2024 YR4 was potentially going to impact Earth, with an impact probability of about 3%. Now, that probability has fallen below 0.001%.

This was an interesting window to check planetary defense in action. Planetary defense has improved a lot over recent years, with NASA establishing monitoring systems like Sentry, creating the Planetary Defense Coordination Office, and successfully demonstrating a prototype of asteroid deflection with the 2022 DART mission. When YR4 was detected with a >1% impact probability, it triggered a global alert among space agencies, with astronomers using the James Webb Space Telescope for detailed study and UN-sponsored groups coordinating international response. This is amazing progress and perhaps some of our best work to combat potential risks of human extinction. Nonetheless, we can do more: catalog more asteroids and prepare better tests of asteroid deflection.

~

…Marble races

I’ve recently been enjoying the YouTube channel “Jelle’s Marble Runs”, where marbles race and are commentated on as if they were racecars. It’s very fun.
