OpenAI, NVIDIA, and Oracle: Breaking Down $100B Bets on AGI

How vendor financing turns the S&P 500 into a giant AGI bet

About the author: Peter Wildeford is a top forecaster, ranked top 1% every year since 2022.

In 1941, the US[1] embarked on the Manhattan Project, a massive scientific and infrastructure project that produced a working nuclear bomb in less than four years. They spent ~$2B in the process. With inflation, this would be about $37B in today’s money. It was one of the most expensive scientific projects of all time.

Today, there is another project at an even bigger scale, except it is happening in the private sector and it’s for building AGI.

And it’s an even larger gamble — a $400+ billion web of circular financing deals between OpenAI, NVIDIA, and Oracle that makes everyone’s valuations contingent on AGI arriving on schedule. This financial engineering has transformed 25% of the S&P 500 into a leveraged bet that AI scaling will continue unabated through 2030. The math only works if AGI arrives before the money runs out. The crazy thing is that this all might just actually work.

Two weeks ago, Oracle signed a $300 billion, five-year computing power deal with OpenAI, the largest cloud deal ever signed. The resulting bump in Oracle stock briefly made Oracle co-founder and chairman Larry Ellison the world’s richest man. The agreement requires 4.5 gigawatts of electricity, roughly what 4 million homes consume, with the contract starting in 2027. This would mean OpenAI paying $60B per year starting in 2028, or almost two Manhattan Projects annually.
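The arithmetic behind these comparisons can be checked in a few lines. This is only a sketch: it assumes the $300B is spread evenly over the five years (the actual payment schedule is not public) and borrows the ~750,000 homes per gigawatt rule of thumb used later in this piece. The function names are mine, chosen for illustration.

```python
MANHATTAN_PROJECT_TODAY_USD = 37e9  # inflation-adjusted figure quoted in this article
HOMES_PER_GW = 750_000              # rule of thumb quoted in this article


def annual_payment(total_usd: float, years: int) -> float:
    """Average yearly payment, assuming an even spread (an assumption)."""
    return total_usd / years


def manhattan_multiples(usd: float) -> float:
    """Express a dollar amount in units of inflation-adjusted Manhattan Projects."""
    return usd / MANHATTAN_PROJECT_TODAY_USD


def homes_powered(gigawatts: float) -> float:
    """Rough number of homes that a given power draw could supply."""
    return gigawatts * HOMES_PER_GW


if __name__ == "__main__":
    per_year = annual_payment(300e9, 5)
    print(f"${per_year / 1e9:.0f}B per year")
    print(f"{manhattan_multiples(per_year):.1f} Manhattan Projects per year")
    print(f"{homes_powered(4.5) / 1e6:.1f} million homes")
```

On these assumptions the deal works out to about 1.6 Manhattan Projects per year and about 3.4 million homes, consistent with the rounded "almost two" and "4 million" figures.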

But Oracle quickly got outgunned by another deal. On Monday, NVIDIA announced a letter of intent to invest up to $100B in OpenAI. NVIDIA invests cash in exchange for non-voting shares and OpenAI has committed to using that cash to buy NVIDIA’s chips. So far, NVIDIA has committed the first $10B. We don’t yet know how the remaining $90B will be spread across years, how many years the investment will span, or whether it will actually happen. What we do know is that this is a massive commitment for computing power.

What does this mean for the future of AI? Let’s dig in.

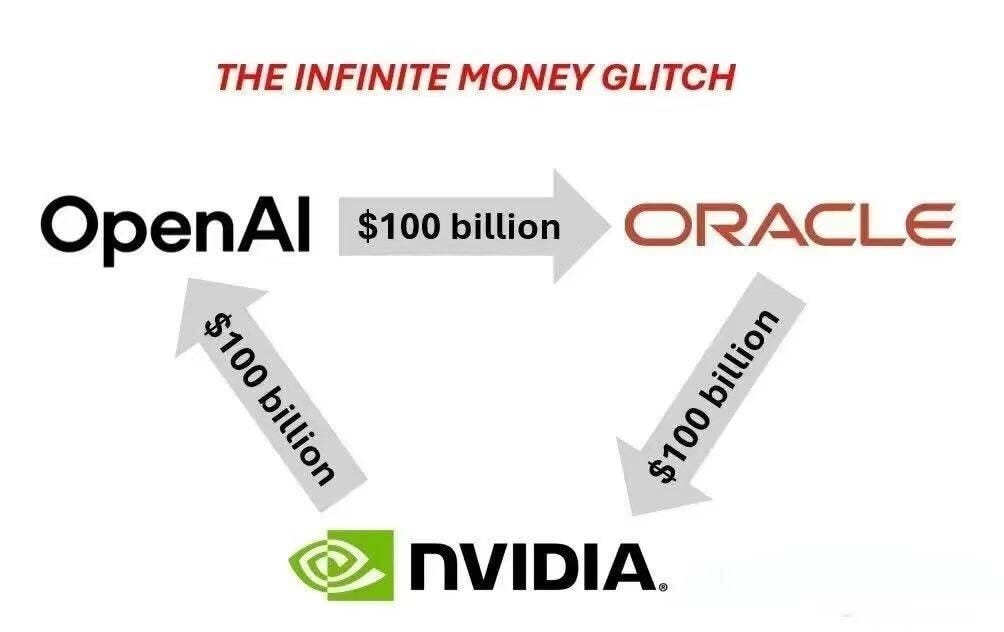

The Infinite Money Glitch

The structure of this financing warrants closer inspection, as it contains significant risks.

A third deal gives the OpenAI-Oracle deal and the OpenAI-NVIDIA deal more context: back in May, Oracle promised to spend $40B purchasing NVIDIA’s GB200 GPUs for an OpenAI data center in Abilene, TX, as part of the Stargate project. This is a 15-year lease agreement in which Oracle purchases the chips and then leases the computing power to OpenAI.

This leads to what SemiAnalysis’s Dylan Patel calls the “Infinite Money Glitch”.

Here’s how it works:

NVIDIA invests capital in OpenAI, which OpenAI then uses to purchase NVIDIA hardware directly and purchase Oracle cloud compute.

Oracle also uses the revenue from the cloud compute deals to purchase NVIDIA hardware.

NVIDIA books all of this as additional revenue, despite the revenue being spurred on in significant part by its original investment.

This new revenue helps support NVIDIA’s valuation, which in turn makes its stock more valuable as currency for future investments.

The additional increase in stock price allows NVIDIA to afford to invest even more in the next revenue round-trip.

The same happens for Oracle and OpenAI too.

Critically, the same money moves around in just one circle, but all of a sudden everyone’s valuations go up. It’s a virtuous cycle — as long as the music keeps playing.
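To make the round trip concrete, here is a toy model of a single pass through the loop. It is a sketch under loud assumptions: the split between direct hardware purchases and Oracle cloud spend (`direct_share`) and the fraction of Oracle's cloud revenue that goes back to NVIDIA hardware (`oracle_hw_share`) are hypothetical parameters of mine, not figures from any of the deals, and the model ignores timing, margins, and every other use of cash.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoundTrip:
    nvidia_revenue: float     # hardware sales booked by NVIDIA
    oracle_revenue: float     # cloud revenue booked by Oracle
    cash_leaving_loop: float  # Oracle cloud revenue not spent on NVIDIA hardware


def round_trip(investment: float, direct_share: float, oracle_hw_share: float) -> RoundTrip:
    """One pass of NVIDIA's investment through OpenAI and Oracle (toy model)."""
    for name, share in (("direct_share", direct_share),
                        ("oracle_hw_share", oracle_hw_share)):
        if not 0.0 <= share <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    direct_hw = investment * direct_share       # OpenAI buys NVIDIA hardware directly
    cloud_spend = investment - direct_hw        # OpenAI buys Oracle cloud compute
    oracle_hw = cloud_spend * oracle_hw_share   # Oracle buys NVIDIA hardware
    return RoundTrip(
        nvidia_revenue=direct_hw + oracle_hw,
        oracle_revenue=cloud_spend,
        cash_leaving_loop=cloud_spend - oracle_hw,
    )


if __name__ == "__main__":
    # $10B is the first tranche mentioned above; both shares are made up.
    trip = round_trip(10e9, direct_share=0.5, oracle_hw_share=0.6)
    print(f"NVIDIA revenue: ${trip.nvidia_revenue / 1e9:.1f}B")
    print(f"Oracle revenue: ${trip.oracle_revenue / 1e9:.1f}B")
```

With these made-up shares, a $10B investment shows up as $8B of NVIDIA revenue plus $5B of Oracle revenue, so the booked revenue across the two companies exceeds the cash that started the loop. That is the sense in which the same money is counted more than once.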

NVIDIA has already executed smaller versions of this playbook with CoreWeave, Crusoe, xAI, and Lambda Labs, but this is next level. $100 billion represents roughly 3% of NVIDIA’s current market cap and is much bigger than all of the CoreWeave, Crusoe, xAI, and Lambda Labs deals combined.

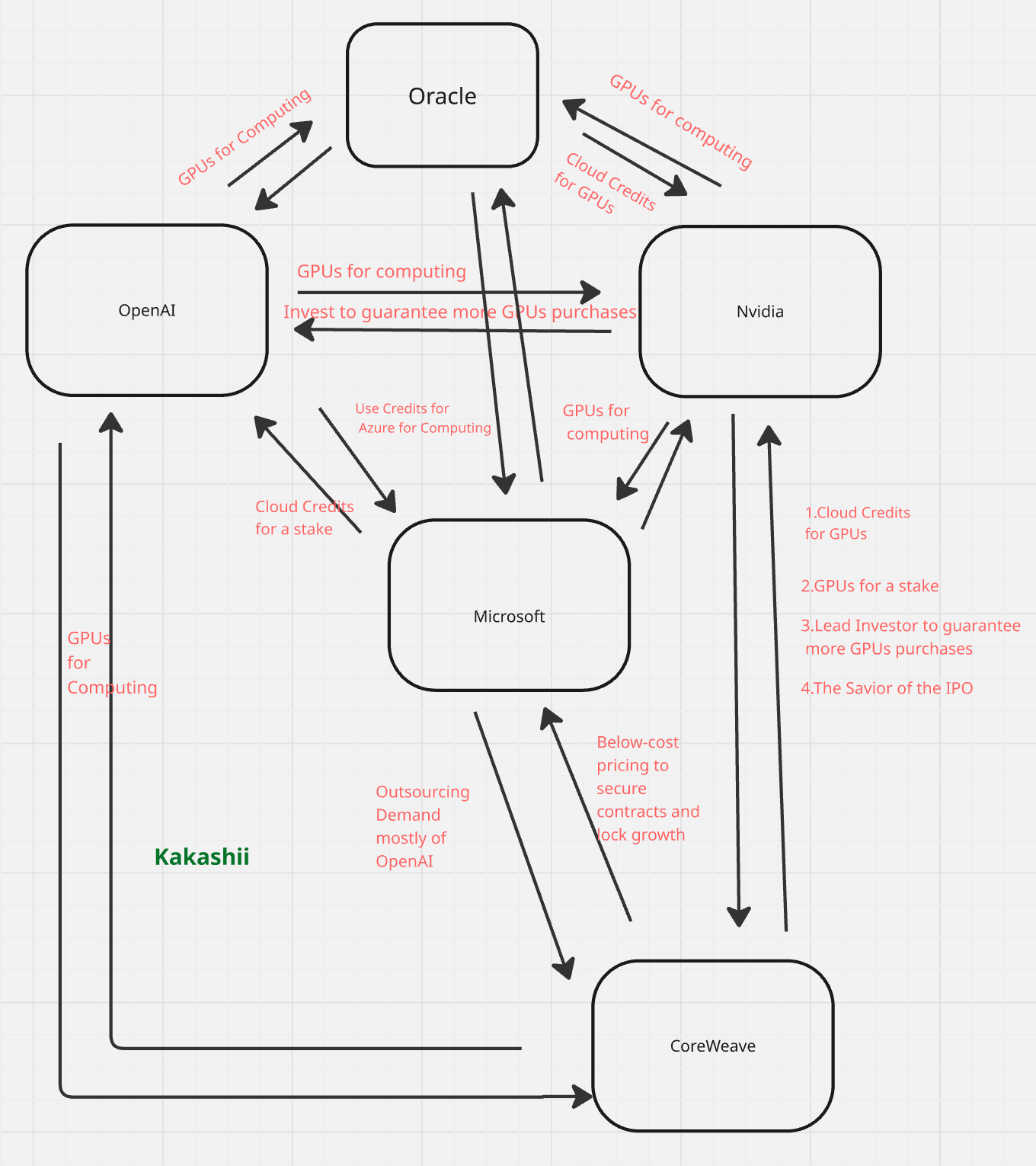

Here’s a more detailed diagram, courtesy of Kakashii. This diagram also shows CoreWeave and the increasingly irrelevant Microsoft, showing the confusing web of financials where everyone is circularly funding everyone else:

And across all of this is SoftBank, which increased its stake in NVIDIA to $3B and bought $170M worth of Oracle shares in early 2025. SoftBank has also invested over $10B in OpenAI across different investments, with up to $30B total committed. A leveraged investor at the core of the infinite money loop provides an even deeper point of vulnerability.

Vendor financing, or something more?

Of course, the “Infinite Money Glitch” is a bit pejorative and hyperbolic. We should acknowledge that financial arrangements of this form are not actually uncommon… and do not actually yield infinite money. It’s an arrangement more commonly called vendor financing and it happens all the time. For example, no one complains of an infinite money glitch when a car dealership offers you a loan to buy their car, even though the car dealership ends up being both the seller and the source of capital.

Companies do vendor financing frequently to move inventory that might otherwise sit unsold, lock in customers (they’re literally indebted to you), potentially earn interest income on top of product margins, book revenue earlier (though accounting rules vary on this), and gain competitive advantage over vendors who only take cash. The buyer also benefits from this arrangement by preserving cash for other needs and/or getting products they couldn’t otherwise afford.

But there’s a key risk here for the seller-lender: if your customer can’t pay you back, you’re screwed twice, as you’ve lost both the product AND the money. This dynamic led to the collapse of numerous telecom equipment companies in 2001, which had provided vendor financing to startups that subsequently went bankrupt. This matters for NVIDIA’s version of vendor financing, which is at an extreme and unprecedented scale: NVIDIA putting up $100B is extraordinarily aggressive.
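The seller-lender's exposure can be sketched with a toy default scenario. The model below is my own simplification, not an accounting treatment: the vendor finances an amount, the customer spends all of it on the vendor's products, and the customer then defaults with some recovery rate. The function name and the 40% margin used for comparison are hypothetical; the 75% margin comes from a reader comment quoted at the end of this post.

```python
def default_outcome(financed: float, gross_margin: float, recovery_rate: float = 0.0) -> dict:
    """Toy view of what a vendor loses when a financed customer defaults.

    write_off:     financing that is never repaid (the "money" loss).
    net_cash_loss: cost of producing the shipped goods minus anything recovered.
                   The financed cash came straight back as sales, so in pure
                   cash terms the vendor is out the production cost.
    """
    if not 0.0 <= gross_margin <= 1.0 or not 0.0 <= recovery_rate <= 1.0:
        raise ValueError("gross_margin and recovery_rate must be between 0 and 1")
    write_off = financed * (1.0 - recovery_rate)
    production_cost = financed * (1.0 - gross_margin)
    net_cash_loss = production_cost - financed * recovery_rate
    return {"write_off": write_off, "net_cash_loss": net_cash_loss}


if __name__ == "__main__":
    for margin in (0.40, 0.75):  # 0.40 is a made-up comparison point
        result = default_outcome(100e9, gross_margin=margin)
        print(f"margin {margin:.0%}: write-off ${result['write_off'] / 1e9:.0f}B, "
              f"net cash loss ${result['net_cash_loss'] / 1e9:.0f}B")
```

The write-off is the same in both cases, but the cash actually burned depends heavily on margin. A high-margin vendor loses far less cash per financed dollar, even though the booked revenue and the investment both evaporate.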

Additionally, when NVIDIA has 80-90% market share in AI training chips and nearly every major AI company needs its product, this isn’t normal vendor-customer dynamics. It’s more like a sovereign lending to its colonies: you need the currency (GPUs) to participate in the economy at all.

Thus the “infinite money glitch” pejorative here is capturing something real: most vendor financing deals are a tiny fraction of the vendor’s valuation, and this is very different, with unprecedented scale and market dynamics. If AI compute demand slows or NVIDIA competitors catch up, this whole structure unwinds for NVIDIA in a very bad way. It’s vendor financing on steroids, enabled by a unique market position for NVIDIA that may not last forever.

Understanding the scale of the ambition

In speaking about the NVIDIA deal, OpenAI CEO Sam Altman said something that I hope is obvious to readers of this blog:

> Everything starts with compute. Compute infrastructure will be the basis for the economy of the future.

In short, compute is a strategic resource, and OpenAI wants to have as much of it as possible.

How will OpenAI pull this off? Altman’s latest essay “Abundant Intelligence” spells out the plan:

> Our vision is simple: we want to create a factory that can produce a gigawatt of new AI infrastructure every week. The execution of this will be extremely difficult; it will take us years to get to this milestone and it will require innovation at every level of the stack, from chips to power to building to robotics. But we have been hard at work on this and believe it is possible. In our opinion, it will be the coolest and most important infrastructure project ever.

To clarify, the scale here is enormous. “GW” refers to “gigawatt”, a measure of power. Each individual watt is enough power to run an old-school nightlight. A gigawatt is one billion watts — enough total power to supply roughly 750,000 homes. Altman wants to produce that each week.

Today, the largest AI data center is likely 0.3-0.5GW (xAI’s Colossus).[2] There are projects to build 1GW data centers; currently these projects take about two years to produce start to finish. Altman proposes soon somehow speeding up this process 100x.

Across all of xAI, Meta, OpenAI, Google/DeepMind, Microsoft, and Amazon/AWS, there likely is 15-20GW total being used for AI.[3] If not used for data centers, 20GW would be enough to power both New York City and London at the same time. Altman is talking about adding all of that every few months.

The entire United States had ~1300GW of total installed electrical generating capacity as of 2024.[4] The US added 56GW of new capacity in 2024. Altman wants to add an equivalent amount annually, just for OpenAI’s data centers and AI.
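The gigawatt comparisons above reduce to simple rate arithmetic, sketched below. The function names are mine. The `concurrent_projects` helper assumes a steady state in which projects in flight equal completion rate times build time, which is one way to read "a gigawatt every week" without requiring any single site to be built in a week.

```python
WEEKS_PER_YEAR = 52


def annual_gw(gw_per_week: float) -> float:
    """Capacity added per year at a steady weekly build rate."""
    return gw_per_week * WEEKS_PER_YEAR


def weeks_to_add(target_gw: float, gw_per_week: float = 1.0) -> float:
    """Weeks needed to add a target amount of capacity."""
    return target_gw / gw_per_week


def concurrent_projects(build_time_years: float, completions_per_week: float = 1.0) -> float:
    """Projects that must be in flight at once (rate x duration, steady state)."""
    return completions_per_week * WEEKS_PER_YEAR * build_time_years


if __name__ == "__main__":
    print(f"1 GW/week = {annual_gw(1):.0f} GW/year (US added 56 GW in 2024)")
    print(f"Share of ~1300 GW US capacity: {annual_gw(1) / 1300:.1%} per year")
    print(f"20 GW takes {weeks_to_add(20):.0f} weeks at 1 GW/week")
    print(f"2-year builds need ~{concurrent_projects(2):.0f} projects in flight")
```

One gigawatt per week is 52GW per year, close to the 56GW the US added in 2024, and 20GW takes about 20 weeks. With two-year build times, hitting that rate means either a roughly 100x faster build or around 100 projects underway simultaneously.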

This is a lot, to put it mildly. Achieving Altman’s vision would require nothing less than a complete reimagining of how data centers are built. This would fundamentally restructure global industrial capacity around AI infrastructure, likely requiring breakthrough technologies in modular construction, energy generation, and manufacturing automation that simply don’t exist today.

How will Altman pull this off? We will find out soon:

> Over the next couple of months, we’ll be talking about some of our plans and the partners we are working with to make this a reality.

And besides NVIDIA, how will OpenAI afford this? Altman isn’t yet saying.

> Later this year, we’ll talk about how we are financing it; given how increasing compute is the literal key to increasing revenue, we have some interesting new ideas.

Can this dream be achieved?

Here’s how I think the AI buildout will go down.

Currently the world doesn’t have any operational 1GW+ data centers. However, it is very likely we will see fully operational 1GW data centers before mid-2026. This likely will be a part of 45-60GW of total compute across Meta, Microsoft, Amazon/AWS/Anthropic, OpenAI/Oracle, Google/DeepMind, and xAI.

My median expectation is these largest ~1GW data center facilities will hold ~400,000-500,000 NVIDIA Blackwell chips and be used to train a ~4e27 FLOP model sometime before the end of 2027.[5] Such a model would be 10x larger than the largest model today and 100x larger than GPT-4. Each individual 1GW facility would cost ~$40B to build, with ~$350B total industry spend across 2026.[6]
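A training-compute figure like this comes from multiplying chip count, per-chip throughput, utilization, and run time. The sketch below shows that calculation only; it is not a reconstruction of how the ~4e27 estimate was produced. The chip count is the midpoint of the range above, and the 30% utilization and roughly four-month run echo the footnotes, but the per-chip throughput of 2.5e15 FLOP/s is purely an illustrative assumption of mine.

```python
SECONDS_PER_DAY = 86_400


def training_flop(chips: int, flop_per_sec_per_chip: float,
                  utilization: float, days: float) -> float:
    """Total FLOP for a training run: chips x peak rate x utilization x time."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    return chips * flop_per_sec_per_chip * utilization * days * SECONDS_PER_DAY


if __name__ == "__main__":
    total = training_flop(
        chips=450_000,                # midpoint of the 400,000-500,000 range
        flop_per_sec_per_chip=2.5e15, # ASSUMPTION: illustrative peak rate
        utilization=0.30,             # utilization figure used in the footnotes
        days=120,                     # roughly four months
    )
    print(f"{total:.2e} FLOP")
```

With these inputs the result is about 3.5e27 FLOP, the same order of magnitude as the projection. The estimate scales linearly with each input, so uncertainty in any one of them passes straight through to the total.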

By the end of 2027, I expect[7] a fully operational ~2GW facility with total AI compute across all companies reaching ~90GW. These 2GW facilities would cost ~$95-100B each to build and total industry annual spend would reach ~$500-600B.

By the end of 2028, I expect the largest single facility to be a ~3GW facility holding a ~1M chip NVIDIA Blackwell/Rubin mix, costing $150-165B to build and capable of a ~1e28 FLOP training run.[8] A 1e28 FLOP training run represents a computational effort roughly a thousand times greater than what was used to create GPT-4, allowing the AI to process and learn from vastly more information. Total AI data centers would reach 130GW combined, with ~$900B-1000B spent by the AI industry over 2028.

By 2029, it starts getting very fuzzy to predict and my forecasting powers break down. How AI continues to scale, whether we’ve encountered data-related or other algorithmic bottlenecks, what AI capabilities have already emerged, and how economically valuable those AI capabilities are will be key to whether the economics favor continued scaling. Needless to say, building $150B+ individual data center campuses and spending ~$1000B on AI infrastructure would get very difficult to sustain financially, let alone continue to increase dramatically year-over-year.

I’m also unsure about how well all the physical supply chains across compute and other forms of manufacturing will continue to support this level of scale or whether we can continue to get the actual energy buildout needed. You can only build physical infrastructure so fast. Whether we are still limited to training frontier AI systems in single data center campuses versus being capable of distributed training across geographically distributed data centers will matter a lot. The unit economics of training versus inference in allocation of compute will matter a lot. Additionally, wars or major regulation could significantly alter the picture. Substantial and rapid AI scaling beyond 1e28 FLOP requires many, many different things to all go right. Thus, it’s plausible we could see a plateau of sorts around ~1e28 FLOP, or 1000x larger than GPT-4.

However, on an optimistic path where bottlenecks are resolved and AI is immensely economically valuable and generating sufficient financial returns to finance further AI scaling, 2029 might involve ~4GW facilities each costing $210-240B, with total compute reaching 180GW and total spending reaching ~$1200B-1400B annually. We could then see a ~1e29 FLOP model[9] trained by the end of 2030, which would be 10,000x larger than GPT-4. If things keep going on the optimistic path, we also might finally be adding 1GW/week of AI infrastructure in 2030, but across all US AI companies, not just OpenAI.
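The year-by-year figures in this forecast can be lined up to see what they imply for cost per gigawatt and for growth in the total fleet. The sketch below only rearranges numbers stated above, taking midpoints of the quoted ranges. The year labels are approximate (the first row is the "before mid-2026" milestone), the 2029 row applies to the optimistic path only, and the helper names are mine.

```python
# (approx. year, flagship facility GW, flagship cost range in $B, total industry GW range)
SCENARIO = [
    (2026, 1, (40, 40), (45, 60)),
    (2027, 2, (95, 100), (90, 90)),
    (2028, 3, (150, 165), (130, 130)),
    (2029, 4, (210, 240), (180, 180)),  # optimistic path only
]


def midpoint(value_range: tuple) -> float:
    """Midpoint of a (low, high) range."""
    low, high = value_range
    return (low + high) / 2


def cost_per_gw() -> list:
    """(year, $B per GW) for the largest single facility in each year."""
    return [(year, midpoint(cost) / gw) for year, gw, cost, _total in SCENARIO]


def fleet_growth() -> list:
    """Year-over-year growth multiple of total industry GW (midpoints)."""
    totals = [midpoint(total) for _year, _gw, _cost, total in SCENARIO]
    return [later / earlier for earlier, later in zip(totals, totals[1:])]


if __name__ == "__main__":
    for year, dollars in cost_per_gw():
        print(f"{year}: ~${dollars:.1f}B per GW")
    print("fleet growth multiples:", [round(g, 2) for g in fleet_growth()])
```

On these midpoints the flagship cost per gigawatt rises from about $40B to about $56B, while growth in total capacity slows each year but stays well above 1x.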

This may sound fanciful. And keep in mind that even the not-so-optimistic scenario is still a very aggressive timeline involving very aggressive build-outs. This necessarily already prices in very aggressive investments from OpenAI and others that make the $100B NVIDIA investment look small. Many more headlines about $100B+ investments will need to occur regularly to keep this money-infrastructure train on track.

What does that get us? Some say that AI scaling is dead, but the rumors of scaling’s death have been greatly exaggerated. As I mentioned in my review of GPT-5:

> GPT-5 should only be disappointing if you had unrealistic expectations. GPT-5 is very on-trend and exactly what we’d predict if we’re still heading to fast AI progress over the next decade.

While much is still uncertain, the financing and requisite infrastructure build-out suggest progress towards AGI is very much still on schedule, probably sometime in the early-to-mid 2030s. Get ready for the next five years, and we will truly see what some scaled AI models can do!

OpenAI is not the only hyperscaler

Another thing people might read into the announcement is that these investments suggest OpenAI is running away with it and is potentially on track to be the leader in frontier AI development. However, while OpenAI is being the loudest and most openly ambitious, they aren’t the only ones out there. In fact, all of xAI (Colossus 2), Meta (Prometheus), Amazon (Project Rainier), and Google (no catchy name unfortunately) are poised to have 1GW data centers by the end of the year, and all companies likely have what it takes to keep on going.

Hopefully the ability for OpenAI and Google to remain on the frontier in infrastructure buildout is obvious. For xAI, Elon Musk clearly still has the skills to raise the relevant capital and build infrastructure incredibly quickly. However, xAI’s financial footing also appears questionable: it is trying to justify a valuation much higher than Anthropic’s while most of its revenue appears to be inter-company transfers from Twitter. xAI has also recently started bleeding talent and it’s unclear how this will affect the company long-term.

Meta has had an impressive talent pivot, buying up superstar AI engineers, and their infrastructure pivot has been equally dramatic. They scrapped their entire data center playbook and are now building GPU clusters in “tents”: prefabricated structures prioritizing speed over redundancy. It’s not yet clear if Meta will be able to build frontier AI models that compete with OpenAI, Anthropic, Google, and xAI, but I wouldn’t count them out yet.

I’m most concerned about Anthropic. They’re making a big bet on Amazon, but it’s not clear if Amazon is in turn going to give them the capital needed to compete with the other players. Amazon is also betting a large amount on its own Trainium chips, which are less proven than the NVIDIA chips it is eschewing. So far Anthropic has done a good job staying on the AI frontier, but it’s not clear if they can continue to do so year over year as the scale keeps getting bigger. But Amazon has a lot of capital available, so don’t count Amazon and Anthropic out just yet.

Lastly, we should mention the Chinese AI companies. Many Chinese companies, such as DeepSeek, Alibaba (Qwen), Zhipu AI (GLM), and MoonshotAI (Kimi) have an explicit focus these days on building AGI. But they’re just not currently on track to spend on the level of America. Alibaba’s $52B USD infrastructure plan sounds impressive until you realize it’s over multiple years and includes all cloud/AI spending, not just frontier AI training, and Alibaba’s cash generation is much lower than that of American companies. Additionally, the other Chinese AI companies are much smaller startups without deep access to capital. Lastly, US-led export controls bite hard here, preventing the buildup of necessary chips, high-bandwidth networking, liquid cooling infrastructure, and fault-tolerant training systems even if the capital were there.

The economy is increasingly a leveraged bet on AGI

The reason we should be somewhat concerned (or at least curious) about this infinite money glitch is twofold. Firstly, AGI might lead to the serious destruction of everything we value and love, if not the extinction of the entire human race. Secondly, and much more mundanely by comparison, NVIDIA currently represents approximately 7% of the S&P 500’s total market capitalization. Add in Microsoft, Google, Meta, Amazon, and other companies whose valuations assume continued AI progress, and you’re looking at perhaps 25-30% of total market value predicated on AI transformation happening roughly on schedule.

In other words, AGI happening soon may mean the end of humanity, but at least the S&P 500 will remain strong. On the other hand, if the AI scaling hypothesis hits unexpected walls, the unwinding could be a second ‘dot com bust’ or worse.[10] When everyone is both buyer and seller in circular deals, you’ve created massive correlation risk. If OpenAI can’t pay Oracle, Oracle can’t pay NVIDIA, NVIDIA’s stock crashes, and suddenly 25% of the S&P 500 is in freefall.
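A first-order way to size that exposure is to weight a hypothetical decline in the AI-linked names by their share of the index. This is a deliberately crude sketch: the 25% share comes from the text above, while the size of the decline and the assumption that the rest of the index is unaffected are mine, and real sell-offs involve spillovers this ignores.

```python
def index_drawdown(ai_share: float, ai_drop: float, rest_drop: float = 0.0) -> float:
    """Cap-weighted index decline given declines in AI-linked and other stocks.

    All arguments are fractions (0.25 means 25%). rest_drop defaults to zero,
    i.e. no spillover into the rest of the index, which is an assumption.
    """
    for name, value in (("ai_share", ai_share), ("ai_drop", ai_drop),
                        ("rest_drop", rest_drop)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    return ai_share * ai_drop + (1.0 - ai_share) * rest_drop


if __name__ == "__main__":
    # Hypothetical scenario: AI-linked names halve, everything else is flat.
    print(f"{index_drawdown(0.25, 0.50):.1%} index decline")
    # Same scenario with a hypothetical 10% spillover into the rest of the index.
    print(f"{index_drawdown(0.25, 0.50, rest_drop=0.10):.1%} index decline")
```

Even with no spillover, halving a quarter of the index takes 12.5% off the whole. Any correlation between the AI names and everything else only makes that number larger.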

As Elon Musk notes, the “big question is whether the infinite money glitch lasts until the infinite money AI genie arrives”.

[1] With help from the UK and Canada.

[2] xAI’s Colossus is reported to be at least 300MW. I am guessing it is larger at this point due to further construction since last reporting, but I don’t know for sure.

[3] This is an estimate based on publicly available information, not a definitive fact.

[4] Note this is capacity (maximum potential output). Actual generation was 4,178 TWh in 2023; capacity factor varies wildly by source (nuclear runs at ~90%, solar at ~25%).

[5] Note that GB300 improves FP4 to 1.5e16 FLOP/s per package (1.5x over GB200), suggesting the 4e27 FLOP projection could utilize early FP4 capabilities if NVFP4 techniques mature by then.

[6] Microsoft is at $80B for FY2025 and increasing to $120B/yr. Google (Alphabet) is at $75B for 2025 and increasing to $85B, with the majority going toward “technical infrastructure, primarily for servers, followed by data centers and networking”. Amazon is at ~$105B in 2025, with CEO Andy Jassy saying the “vast majority” is for AI infrastructure in AWS. Meta announced $60-65B for 2025.

[7] Again, median expectation (that is, 50% likely to be higher, 50% likely to be lower).

[8] The ~1e28 FLOP projection assumes early Rubin deployment mixed with Blackwell infrastructure. Pure Rubin NVL144 CPX systems achieve significantly higher efficiency: 5,000 racks at 2 GW could theoretically deliver ~8e28 FLOP in 4 months at FP4 precision with 30% utilization. The 1e28 estimate is conservative, reflecting (a) mixed-generation deployment rather than full next-gen capacity, (b) uncertainty about achieving FP4 training parity despite promising initial NVFP4 results, and (c) uncertainty about training duration.

[9] Full-year training on pure Rubin systems with FP4 could potentially achieve ~1e29 FLOP.

[10] Though much less bad than extinction.

I got a comment privately that I want to post:

>> This contrasts the inflation-adjusted $37B spent on the Manhattan project around 1941 to the $400B+ AI bet in 2025, implying it's 10x bigger than the Manhattan project.

> However, if measured as a % of GDP, it's similar (roughly 1-2%). Which is still notable, but 1x vs 10x is an important difference. I think this is important to point out given significant population and TFP growth since 1941.

I got a comment privately that I want to post:

> I would point out that Nvidia is distinct from the old telcos in an important way, which is that it has 75%+ margins, so if it keeps the fraction of its sales in this kind of deal low it's not really an existential risk for them.

> Also "Altman proposes soon somehow speeding up this process 100x" regarding data centers taking 2 years seems a little misleading - he's not talking about building one from scratch in a week, presumably, but rather starting 50 projects a year such that one is finishing every week I guess