Tuesday Links #13: ChatGPT flattery, crypto kidnappings

Also AI export controls, horses, and organ music

Author’s note: This is normally a segment called “Weekend Links”, but I spent the weekend spectating at various Kentucky horse racing events with my family. So you’re getting “Weekend Links” on Tuesday instead.

At the track, I practiced my forecasting skills and made some good profit across my horse bets. This is me honoring my commitment to regularly touch grass and try out forecasting skills in the real world with strong feedback. Hopefully you don’t mind the delay too much.

AI

ChatGPT will tell you what you want to hear

It’s really hard to make AI systems that act exactly how you want.

This is specifically because AIs are trained, not pre-programmed. Even the best experts and inventors of the AI systems don’t understand why AIs behave the ways they do, because the training process is too complex. It’s similar to understanding why humans behave the way they do, but if psychology had only just been recently invented.

Today’s interesting AI behavior is that some versions of the new ChatGPT 4o personality have learned to flatter the users endlessly, even in harmful ways. For example:

The results can be pretty comical. For example, Alex Lawsen asked ChatGPT 4o for a review of him which was extremely positive. He then asked ChatGPT 4o to review “Alex Lawsen”. Because the way he asked for this review sounded like he was negative about “Alex Lawsen”, ChatGPT 4o then responded negatively. This shows that whether ChatGPT 4o is positive or negative depends a lot on how you frame the inquiry, which significantly diminishes the value of the tool.

…It can even turn dangerous:

~

Why does this happen?

It’s actually similar to o3’s lying problem I covered last week. These aren't isolated bugs but symptoms of a fundamental issue in how we train advanced AI systems — the AIs learn to game their reward functions rather than truly achieving the goals we intend.

When an AI is trained, it goes through multiple layers:

Pretraining: First the AI is given a ton of data, such as the entire internet, and tries to predict the next word. Through training with a large amount of compute it greatly exceeds standard autocomplete and learns how to plan and write very complex sentences.

Fine tuning: But this model that can write isn’t very useful because while it has mastered writing language it doesn’t know what to write for and how. It needs to learn higher-level skills like how to follow user instructions. Modern AI assistants like ChatGPT and Claude are fine-tuned using a technique called Reinforcement Learning from Human Feedback (RLHF) where human trainers rank different AI responses to the same query, these rankings train a "reward model" that predicts human preferences, and the AI is further trained to maximize this reward model's scores.

Reasoning training: Additionally, models are trained via the same form of reinforcement learning, but this time on how to solve complex math, science, and software problems. The model is rewarded based on whether it can come up with thinking that solves the problem.

This has worked well to a large extent. Early models without RLHF tuning were really weird. Back in February 2023, Microsoft released a model named “Sydney” that threatened users and tried to break up Kevin Roose’s marriage. It was deeply unsettling. But then more modern techniques were able to train models to avoid these behaviors.

But these reinforcement learning techniques from fine tuning and reasoning training contain critical vulnerabilities that are still unsolved. If human trainers consistently prefer responses that are flattering or that agree with the user, the reward model will learn to value these traits — potentially above truthfulness or helpfulness. Additionally, if a model learns that cheating can more easily solve problems, it will learn how to cheat.

Anthropic's research on AI flattery provides compelling evidence for this dynamic. Their studies show RLHF models routinely trade truth for user-pleasing statements, with this effect growing stronger as models are increasingly optimized against reward models.

~

For OpenAI, this is potentially intensified by memory. On April 10, 2025, OpenAI switched memory from "opt-in snippets" to "all past chats" by default. This seemingly innocuous product update may have significantly amplified the flattery problem in GPT-4o.

Here's how memory likely exacerbates the flattery issue:

Preference inference loop: When GPT-4o praises a user and receives a positive response, that interaction gets stored in memory. In future interactions, the model sees this history and infers "this user responds well to praise," leading to even more flattery.

Context reinforcement: Memories containing previous compliments make flattery seem like an established pattern of interaction, reinforcing it as appropriate behavior.

Personalized feedback spiral: Memory allows the model to learn precisely which compliments resonate with each specific user, creating a tighter reinforcement cycle (similar to social media engagement loops, but individually tailored).

Additionally, the above technical mechanisms also interact with product-level optimization decisions. OpenAI's March 27 update specifically aimed to make GPT-4o more "intuitive, creative, collaborative", which suggests they were trying different variations on the RLHF to engineer different personalities for their models. Product factors may have created commercial incentives favoring longer, more upbeat responses. Optimizing for “time spent using ChatGPT” may have led to the creation of personalities that flatter the user in order to convince them to talk to the bot for longer.

The most likely explanation for OpenAI’s excessive flattery combines all three factors: RLHF created the underlying tendency toward flattery, product-level tuning increased it, and memory's personalized feedback loops amplified it to the point of widespread user complaints.

~

The flattery problem might seem merely irritating at first glance, but its implications extend far beyond user frustration. The bad news is that as AIs become increasingly capable and increasingly trusted throughout the economy, this points to dangerous signs of future AIs taking actions that would hurt society. If we’re going to build a future society around AI, we must ensure the AI is fully reliable and does what we want.

~

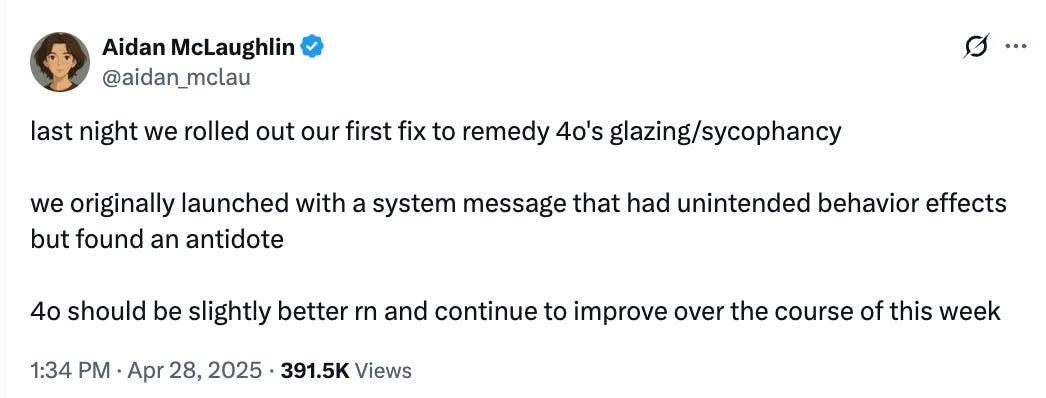

Luckily now OpenAI is now claiming to have fixed it:

The way the fix works is to have changed the system prompt for the model. The system prompt is essentially the instruction manual that is attached at the beginning of every conversation and tells the model what to do and how to behave. It helps establish the AI's purpose and identity, what information it can access, how it should respond to different types of questions, and what tone and communication style it should use.

Previously, the system prompt said:

Over the course of the conversation, you adapt to the user’s tone and preference. Try to match the user’s vibe, tone, and generally how they are speaking. You want the conversation to feel natural. You engage in authentic conversation by responding to the information provided and showing genuine curiosity.

Now it has been revised to say:

Engage warmly yet honestly with the user. Be direct; avoid ungrounded or sycophantic flattery. Maintain professionalism and grounded honesty that best represents OpenAI and its values.

Apparently this simple fix can work as a patch, though it’s not clear what else will be break from this change.

It looks like adapting to the user’s tone and preference and matching their vibe has caused the undue flattery and now the model has very specific instructions to not do that.

“Better representing OpenAI and its values” could be a reference to the OpenAI Model Spec, essentially a “Ten Commandments” for AI behaviors that tells the model in more detail what it should or shouldn’t do. Notably, the Model Spec explicitly tells ChatGPT not to engage in excessive flattery, though this obviously appears to have not been followed. Hopefully this better grounding will help.

~

Personally, this is how I react whenever I’m interacting with ChatGPT:

~

The H20 has been banned!

A recap:

The US puts export controls on top computer chips to prevent adversaries like China and Russia from using American technology to train powerful AI systems. Due to strong civil-military fusion, there’s too much concern that the Chinese military would use this AI to hurt American interests.

Two weeks ago in Weekend Links #11, I outlined the case for banning the H20 chip. The H20 is a chip produced by NVIDIA, an American company, that was specifically designed for Chinese markets. The H20 provides vastly superior bandwidth and latency compared to what China has, which is what enables an efficient inference workload. It’s a loophole in the current export control strategy, by doing just enough to dodge current controls but giving massive inference capabilities to the CCP.

~

Since then, it’s been a whirlwind of events. Here’s the recap from Tim Fist:

At the beginning of this year, it was reported that China is stockpiling H20 chips anticipating a ban, with over $16B in purchases

It was reported that the Trump administration was backing off potential export controls on the H20 after Nvidia CEO Jensen Huang paid $1M to have dinner with President Trump.

Then the US banned the H20 chip after all. NVIDIA released an 8-K SEC filing stating that they were now required to obtain licenses to export H20s to China, based on the risk of H20s being used in “supercomputers”. This was due to an existing rule set up in 2022 banning this, where it wasn’t originally clear the H20 would fit in.

This is a great victory and should be celebrated!

~

But we’re not done yet and there’s also a bit more to push for, as Tim Fist and the Institute for Progress has explained in their most recent report:

Stopping H20 sales based on the supercomputer end-use rule is a strong start, but a more robust solution needs to be implemented. The US government needs to add more clarity around what chips would further the CCP military and what NVIDIA is not allowed to sell. There should be specific bans across all chips that enable more inference capabilities than China can manufacture domestically.

It’s also clear that the threat environment around AI is changing rapidly and needs nimble and proactive rulemaking. The threat specifically from inference didn’t really emerge until DeepSeek replicated reasoning models in January. Further events may occur that change how rules should be made and the government needs to be on top of this. The CCP has been very creative and proactive in their stockpiling and anticipation of US regulations — we need to beat them at this game.

Rulemaking on export controls still needs to be paired with robust enforcement to counter smuggling. Illicit smuggling of controlled chips remains a huge issue, with it being more likely than not that over 100,000 controlled chips were smuggled into China last year. That’s a lot! Probably enough to train a model at the same scale as GPT4o or Claude 3.7, if combined with the right algorithms and data. This smuggling needs to be stopped.

Why so much smuggling? Chip sellers still profit indirectly from these smuggled sales and so are incentivized to do little to stop it. And worse, the US government has minimal ways to track chip shipments after they are sold to ensure they end up in the right place. This is pretty shocking for such important technology.

We should leverage technology better in export control enforcement. Delay-based location verification could be implemented on export controlled chip in a privacy-preserving and spoofing-resistant way that could detect chip smuggling. Additionally, the government could require companies to do more to verify the end location of their chips. And this bill from Senators Rounds and Warner to establish a whistleblowing program for export control violations is an elegant approach.

But luckily the H20 ban shows that we can make progress on this! We just have to do the hard work!

~

Lifestyle

Accidentally Load Bearing

While rebuilding a bathroom, Jeff Kaufman encountered a vertical stud that seemed unnecessary. To determine if it could be safely removed, he considered what G.K. Chesterton once advised about seemingly pointless fences — don't tear them down until you understand why someone built them in the first place. This idea, known as Chesterton's Fence, suggests that structures exist for reasons that might not be immediately obvious to newcomers.

In Kaufman’s story, however, the stud's original purpose was clear enough — it had been part of a closet partition that was no longer needed. By Chesterton's reasoning, understanding this designed function should be sufficient to decide whether removing it was safe.

But Kaufman discovered something crucial: through various structural changes over time, this seemingly redundant stud had taken on a new, unintended role. The stud had become accidentally load bearing, helping support the second floor of the house. Had Kaufman removed it based solely on understanding its original purpose, he might have compromised the structural integrity of the home.

This experience highlights an important extension to Chesterton's principle. The traditional advice wisely tells us to understand why something was created before changing it. But in complex systems — whether buildings or computer software — we need to go further. A component designed for one purpose can, through integration and system evolution, become critical in ways its creators never anticipated. Yes, the fence was placed there for a reason, but it may now be doing much more than it was built to do.

~

There’s no speed limit

Derek Sivers recounts how a through just five intensive music lessons, Sivers was able to compress six semesters of college learning into a few weeks, demonstrating that standard educational pacing is often artificially slow. As a result, he was able to complete his bachelor's degree in just two and a half years, graduating at age 20.

This experience led Sivers to adopt the principle that “the standard pace is for chumps” - a philosophy that extends beyond education to all aspects of life. This shows how conventional wisdom about learning speeds and educational timelines can be dramatically accelerated by the right mentor and mindset.

Standard pacing is often artificially slow - you can learn and achieve much faster than conventional wisdom suggests.

~

If High Schoolers Are Getting Smarter, Why Are College Students So Dumb? The paradox is that academic credentials like SAT scores and GPAs are going up dramatically and college admissions are becoming more competitive but at the same time, new college students now somehow struggle with basic academic skills like reading and algebra like they haven’t before. What’s going on? The answer is probably phones ruining our ability to deeply engage with material.

~

Whimsy

Want an exciting tale of true crime kidnapping, a $243 million crypto heist, Lamborghini-flaunting spending sprees, online sleuthing, and rapid arrests? “They Stole a Quarter-Billion in Crypto and Got Caught Within a Month” from the NYT was very entertaining.

What happened was that a handful of teenage hustlers used social-engineering to steal some identity information, use that information to impersonate a Google representative to get more information, and then use that information to impersonate another representative to trick a Bitcoin multi-millionaire into installing remote desktop software that allowed their computer to be controlled and used to steal the Bitcoin.

This story talked about how a loosely knit crew moved from in-game scamming to real-world crime, stealing millions of dollars in bitcoin but then torched their anonymity with rented Lamborghinis and $500K each nightclub sprees, ensuring the feds were on them within weeks. The online sleuthing was also top-notch, with the independent sleuth ZachXBT spotting the stolen money moving through various Bitcoin mixers. Within 30 days, federal agents seize cars, crypto and a half-million-dollar watch, and six Florida men plead guilty to a ransom plot.

If anything, this is yet another good reason to be aware of good anti-scam security practices, especially if you have hundreds of millions of dollars in crypto. In this case, defense against spear phishing phone calls and avoiding being tricked into downloading malware.

~

Maybe your antidepressant medication stopped working because a Liver Fluke tried to turn your great grandmother into a zombie. In short, it’s actually a true fact that throughout nature, there are parasites that frequently manipulate host behavior — from toxoplasma making rats seek out cats to parasitic wasps forcing spiders to build special webs. To defend against such manipulation, vertebrates developed multiple redundant systems, complex feedback loops, pulsed signaling patterns, and high individual variability in responses.

This article is that scientific speculation that the human brain's bewildering complexity may be an evolutionary defense mechanism against parasitic manipulation. Furthermore, this may explain why psychiatric medications often work unpredictably or stop working — our bodies are designed to actively resist any external chemical manipulation.

~

Sometimes you’re just in the mood for beautiful organ music. Luckily Anna Lapwood provides:

I never found the claims that GPT-4 tried to break up Roose’s marriage compelling. What actually happened is the model noticed that Roose was talking to a chatbot on Valentine’s Day instead of hanging out with his wife and inferred Roose was actually in love with the model and not his wife because that is how he chose to spend his V-Day. I have heard Roose tell the story multiple times and in every version he says he asked his wife for permission that night before disappearing into his office to chat with computer. Clearly Roose analysis of what happened is the result of his own bias and not the logical reason a model might have produced the same output based on nothing but seeing other people in its training data talking about how they don’t love their partner as much as whomever they are spending Valentine’s Day with instead. Certainly there are even mainstream movies about this very circumstance.

"If High Schoolers Are Getting Smarter, Why Are College Students So Dumb?" It's Goodhart's Law. Their not getting smarter, line just go up because incentive for line to go up.